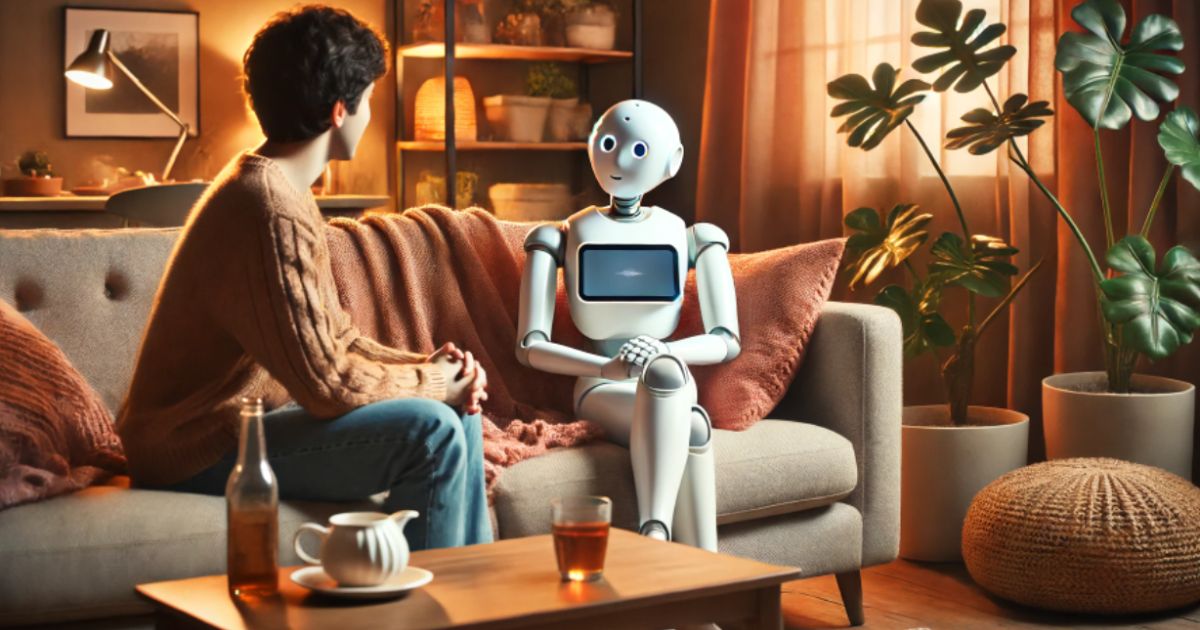

Mental health support has never been more accessible—or more impersonal. AI-powered chatbots and virtual therapists are now available 24/7, offering comfort, coping strategies, and even crisis intervention within seconds. For someone struggling at 2 a.m., that instant reply can feel like a lifeline.

But can a machine really understand pain? Can an algorithm feel empathy, or know when someone needs more than just a scripted response?

As artificial intelligence steps into the deeply human realm of mental health, we face a profound dilemma: Are we making help more available—or more hollow? While AI can assist with early screening and support, it lacks the nuance, intuition, and emotional warmth of a trained therapist.

This article explores the promise and the pitfalls of AI in mental health—where digital convenience meets emotional complexity, and where the future of care might depend not just on technology, but on maintaining our humanity.

🌟 The Promise: How AI Is Supporting Mental Health

| # | Benefit | Description |
|---|---------|-------------|
| 1 | 24/7 Availability: Help Anytime, Anywhere | AI chatbots and tools provide round-the-clock support, especially when human professionals aren’t available. |
| 2 | Reduced Stigma: Private, Nonjudgmental Spaces | Users may feel more comfortable opening up to AI, helping reduce the stigma around seeking help. |
| 3 | Early Detection: Spotting the Signs Sooner | AI can analyze language and behavior patterns to detect early signs of anxiety, depression, or distress. |
| 4 | Scalable Support: Reaching More People | AI extends mental health services to underserved areas where therapists are scarce or unaffordable. |
| 5 | Data-Driven Insights: Smarter Care Plans | AI helps clinicians track patient progress and personalize treatment using trends in emotional and behavioral data. |

⚠️ The Peril: Where AI in Mental Health Falls Short

| # | Risk | Description |
|---|------|-------------|
| 1 | Lack of Empathy: Robots Can’t Relate | AI lacks the human warmth, empathy, and nuanced understanding crucial to mental health care. |
| 2 | Misdiagnosis Risks: False Alarms or Missed Cries for Help | AI may misinterpret emotions or overlook subtle signs, leading to harmful misjudgments. |
| 3 | Privacy Concerns: Sensitive Data at Risk | AI tools handle deeply personal information that could be misused, leaked, or mishandled. |
| 4 | Over-Reliance: Substituting Support With Software | People may turn to AI instead of seeking qualified human professionals when needed. |
| 5 | Ethical Boundaries: Who’s Responsible? | If an AI gives harmful advice, it’s unclear who is accountable: developers, providers, or platforms. |